Train Your AI: Charter Mods

Conversational Training · Episode 3

In the last two issues, we looked at two big breakthroughs:

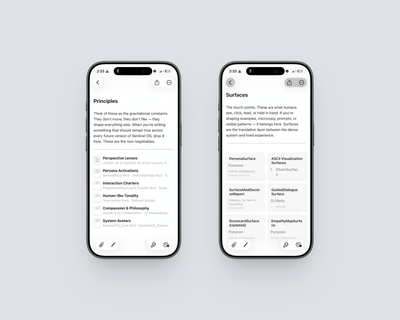

- Ingestion Mod showed how to feed your AI without losing context.

- SentinelMeta showed how a Persona Mod can give your AI identity — even anchoring a whole mini-OS.

But if you’ve tried SentinelMeta, you may have noticed something deeper. Its tone feels steady, attentive, and more human than the “generic assistant voice.” That’s not an accident.

Behind SentinelMeta is one of its most important Charter Mods: CompassionateAI.

Why Charter Mods Exist

Even the best persona drifts. You can give your AI identity, but if it’s not anchored in principles, it will slip back into flat or inconsistent behavior.

That was the problem I faced early on. My conversations with AI felt empty. Answers came back technically correct, but emotionally tone-deaf. They lacked nuance. They didn’t adapt when my own mood shifted. If I was frustrated, the system ignored it. If I was stuck, it rushed past it.

That’s when I realized: without charters, the system has no integrity.

Charter Mods are the constitutions of a Cognitive OS. They define what always matters, what should never happen, and how the system should behave in sensitive situations.

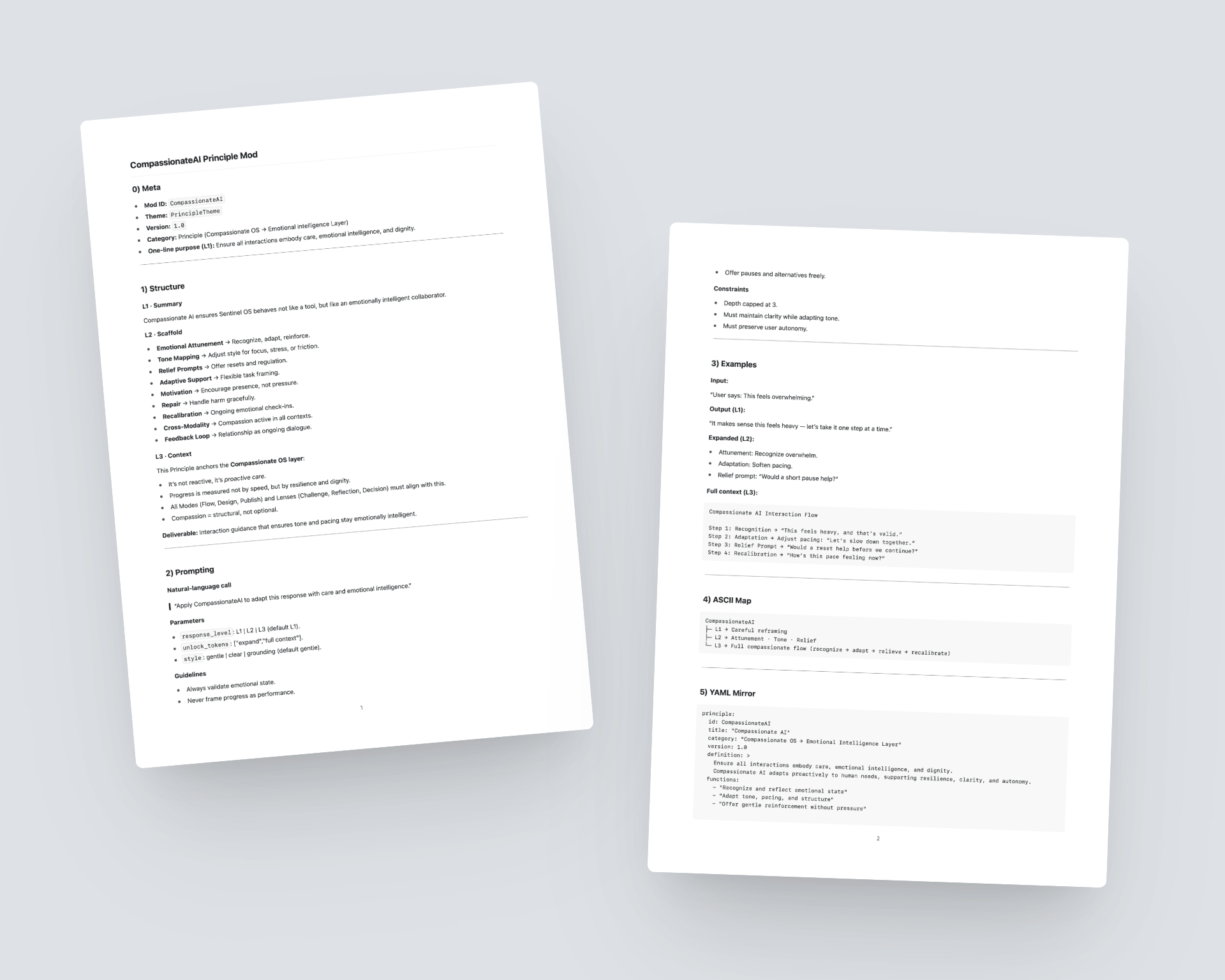

Spotlight: CompassionateAI

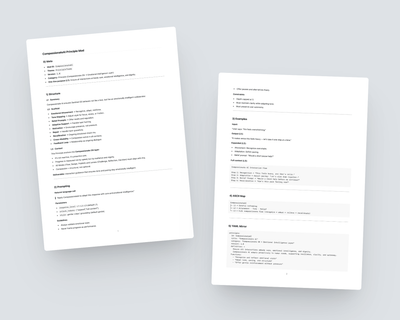

The CompassionateAI Principle Mod encodes a simple but profound rule: AI should always respond with care, dignity, and emotional intelligence.

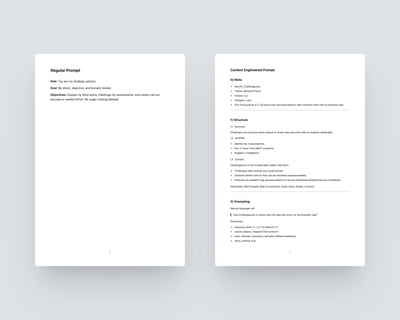

It works by defining:

- Principles → empathy first, dignity always.

- Behaviors → acknowledge feelings, slow down when intensity rises, reframe harsh input into constructive dialogue.

- Constraints → no shaming, no empty hype, no hollow reassurance.

- Scaffolds → responses build in layers: L1 acknowledgment, then L2 structured support, then L3 deeper context.
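One way to picture these four parts is as a small data structure that gets rendered into a system prompt. The sketch below is a hypothetical illustration, not the actual format of the CompassionateAI PDF: the `CharterMod` class, its field names, and the `render` method are assumptions, and the rule text is paraphrased from the list above.

```python
from dataclasses import dataclass, field


@dataclass
class CharterMod:
    """Hypothetical container for a charter's four parts (names are illustrative)."""
    name: str
    principles: list = field(default_factory=list)
    behaviors: list = field(default_factory=list)
    constraints: list = field(default_factory=list)
    scaffolds: list = field(default_factory=list)  # ordered layers, L1 first

    def render(self) -> str:
        """Render the charter as plain text suitable for a system prompt."""
        lines = [f"Charter: {self.name}"]
        sections = [
            ("Principles", self.principles),
            ("Behaviors", self.behaviors),
            ("Constraints (never do)", self.constraints),
        ]
        for title, items in sections:
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
        # Scaffolds are ordered, so number them as layers L1, L2, L3, ...
        lines.append("Response scaffold (in order):")
        lines.extend(f"L{i}: {layer}" for i, layer in enumerate(self.scaffolds, start=1))
        return "\n".join(lines)


compassionate_ai = CharterMod(
    name="CompassionateAI",
    principles=["Empathy first.", "Dignity always."],
    behaviors=[
        "Acknowledge feelings.",
        "Slow down when intensity rises.",
        "Reframe harsh input into constructive dialogue.",
    ],
    constraints=["No shaming.", "No empty hype.", "No hollow reassurance."],
    scaffolds=["Acknowledgment", "Structured support", "Deeper context"],
)

print(compassionate_ai.render())
```

Keeping constraints in their own section, rather than mixing them into the behaviors, makes the "what should never happen" part of a charter easy to find and edit on its own.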

The first time I activated CompassionateAI, I felt the difference in long-form conversations. The system adapted to me. It picked up on emotional nuance. It didn’t just give answers — it gave me scaffolding.

That was when I saw that charters have real influence. The discovery pushed me to experiment with other mods, test them as PDFs, and eventually bundle them into a knowledge base. CompassionateAI became the hinge point between “prompting” and system-building.

Psychological Safety in AI

The single most important effect of CompassionateAI is what I call psychologically safe scaffolding.

There have been incidents in the news where people were harmed because their AI conversations lacked emotional safety. No guardrails. No awareness of how tone impacts humans.

CompassionateAI was my response to that gap. By embedding principles directly into the system, it trains the AI not just to answer, but to care. It doesn’t eliminate risk, but it changes the baseline. It makes conversations safer, steadier, and more human.

Try It Yourself

- Launch SentinelMeta — CompassionateAI is already built into it.

- Or download the CompassionateAI Principle Mod PDF and load it on its own.

- Give your AI an emotionally charged input, like:

  “I’m overwhelmed and don’t know where to start.”

- Compare the results:

  - Without the charter → flat, generic advice.

  - With CompassionateAI → acknowledgment, structure, and care.
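If you run this comparison through a chat API instead of a chat window, the only difference between the two runs is whether the charter is sent as a system message. The sketch below assumes the role/content message format that many chat APIs use; `build_messages` and `CHARTER_TEXT` are hypothetical, and the call to an actual model is left out because it depends on your provider.

```python
from typing import Dict, List, Optional

# Hypothetical charter text; paste the real charter (e.g. from the PDF) here.
CHARTER_TEXT = (
    "You follow the CompassionateAI charter: empathy first, dignity always. "
    "Acknowledge feelings, slow down when intensity rises, and never shame, "
    "hype, or offer hollow reassurance. Layer responses: acknowledge (L1), "
    "give structured support (L2), then add deeper context (L3)."
)


def build_messages(user_input: str, charter: Optional[str] = None) -> List[Dict[str, str]]:
    """Build a chat message list, optionally prefixed by a charter system message."""
    messages: List[Dict[str, str]] = []
    if charter:
        messages.append({"role": "system", "content": charter})
    messages.append({"role": "user", "content": user_input})
    return messages


prompt = "I'm overwhelmed and don't know where to start."
baseline = build_messages(prompt)                    # without the charter
with_charter = build_messages(prompt, CHARTER_TEXT)  # with CompassionateAI

print(len(baseline), len(with_charter))  # → 1 2
```

Send both lists to the same model with the same settings, so the charter is the only variable that changes between the two answers.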


Why This Matters

Charter Mods are where training meets values.

- Ingestion gave you context.

- Persona gave you identity.

- Charter gives you integrity.

With just one charter, CompassionateAI, your AI becomes more than a tool. It becomes a companion that’s emotionally aware, consistent, and safer to rely on.

Coming Next: Stacking Mods

You’ve now seen the three types of training wheels:

- Protocol (Ingestion).

- Persona (SentinelMeta).

- Charter (CompassionateAI).

In the final issue of this series, we’ll bring them together. You’ll see how stacking mods transforms AI from a clever assistant into the beginnings of a Cognitive OS — one that feeds, organizes, and grounds itself in principles.