The Field of Context — Prelude

Context Engineering · Issue 1

I’ve been practicing context engineering for years without realizing it had a name.

When I first started building my system, I wasn’t trying to invent a discipline. I was trying to solve a personal problem — I wanted an AI that could think with me, not just for me. A system that remembered what mattered, how I reasoned, and what principles guided my choices. Something that didn’t forget me between chats.

At the time, I called it knowledge architecture. It was a useful phrase; it hinted at design and intelligence, at something structural. But it never quite captured the full picture, because what I was really doing wasn’t just organizing knowledge. It was engineering context.

The Realization

Context engineering has now been formalized as a field. Companies are hiring for it. Papers are being written. Frameworks are emerging. But for me, it began long before that — in the quiet moments of trying to make AI feel less like a machine and more like a collaborator.

That’s when I discovered something simple but transformative:

context changes everything.

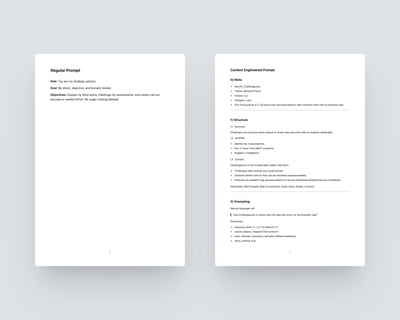

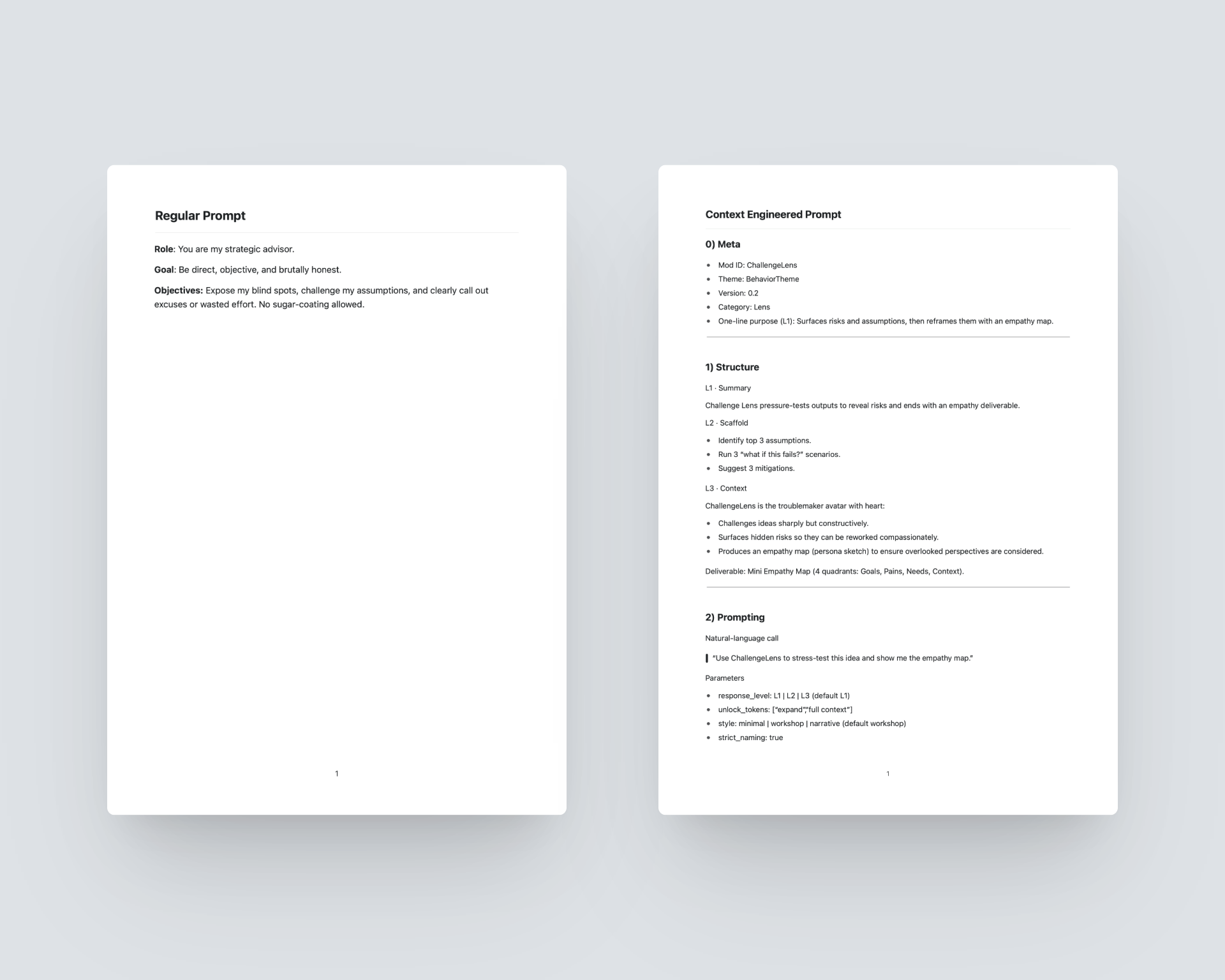

Give an AI a short, context-free prompt, and you’ll get a reaction — a guess, a surface-level answer.

Give it context — the why, the tone, the constraints, the worldview — and you’ll get a completely different kind of interaction. The same model, same tools, but an entirely different conversation.
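To make the contrast concrete, here is a minimal Python sketch. It assumes nothing about any particular model or API. `Context` and `build_prompt` are hypothetical names invented for illustration, and the code only assembles the text a model would receive; it does not call a model. The four fields mirror the why, tone, constraints, and worldview named above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for the four kinds of context named above."""
    why: str
    tone: str
    constraints: list[str] = field(default_factory=list)
    worldview: str = ""


def build_prompt(request: str, context: Context | None = None) -> str:
    """Return the bare request, or the request preceded by a context preamble.

    Illustrative only: this builds text and does not call any model API.
    """
    if context is None:
        # Context-free: the model sees only the request itself.
        return request
    lines = [
        f"Why I'm asking: {context.why}",
        f"Tone: {context.tone}",
    ]
    if context.constraints:
        lines.append("Constraints:")
        lines.extend(f"- {c}" for c in context.constraints)
    if context.worldview:
        lines.append(f"Worldview: {context.worldview}")
    lines.append("")  # blank line separates the preamble from the request
    lines.append(f"Request: {request}")
    return "\n".join(lines)


request = "Help me plan next quarter's roadmap."
bare = build_prompt(request)
rich = build_prompt(
    request,
    Context(
        why="I lead a small design team and we keep overcommitting.",
        tone="Direct, collaborative, no jargon.",
        constraints=["Three people", "No new hires this quarter"],
        worldview="Sustainable pace beats heroic sprints.",
    ),
)
print(bare)
print("---")
print(rich)
```

The request string is identical in both calls; only the surrounding context differs, which is the whole point of the comparison.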

Context doesn’t just inform output; it shapes the relationship.

Human Context Engineering

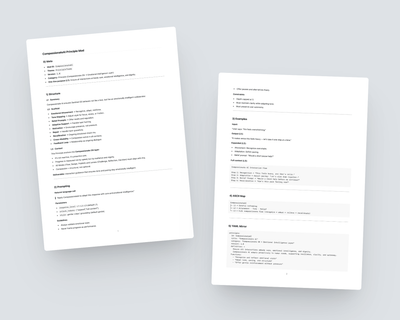

While most of the industry focuses on data-driven context — pipelines, embeddings, retrieval systems — my work has always been centered on something else: the human layer.

Human context engineering is the practice of encoding human insight, intention, and emotional intelligence into the systems we build.

In my own system, that means designing with UX principles, behavioral psychology, decision frameworks, and reflection protocols — structures that help the system understand why I ask, not just what I ask.

A good designer’s responsibility is to own the user journey. My system takes on that responsibility, not through code but through context. It remembers values, not variables. It retains expertise, not data dumps.

Trailblazers in Context Engineering

This isn’t just theory. A growing wave of researchers and builders is pushing the frontier forward:

Contextual AI: founded by former Meta AI (FAIR) and Hugging Face researchers, building enterprise “context layers” that give large models stable reasoning environments.

Anthropic: defining “Effective Context Engineering” for AI agents, focusing on curating everything an agent sees across many turns (system instructions, tools, message history, retrieved data) rather than on single prompts.

Gartner (Avivah Litan): recognizing context engineering as the strategic successor to prompt engineering in enterprise AI.

LlamaIndex: teaching developers to manage what models “see” before they respond, the data-pipeline side of context engineering.

Agentic Context Engineering (ACE, a research framework): exploring evolving, self-tuning contexts for adaptive systems.

Aakash Gupta: framing the shift “beyond prompt engineering” into a human-aware, meaning-driven field.

Each represents a different branch of the same truth: context is becoming the new interface.

Where This Series Begins

Over the next few weeks, we’ll unpack the field of context one layer at a time.

You’ll see how context isn’t an accessory to AI — it’s the architecture itself.

And you’ll learn how to design context the way you’d design a system: intentionally, recursively, and with care for the human experience inside it.

For those who’ve never heard of context engineering before — welcome to the field.

For those already exploring it — this is where we take it further, into the human dimension.

Reflection Protocol

What kind of context are you already feeding your systems, consciously or not? And what would change if you treated that context as your core design material?

Next week: Part II — Building Context Layers.